Introduction:

As artificial intelligence becomes more powerful, it is also becoming harder to understand. Many AI systems make decisions in ways that are not clear to users, and this lack of transparency creates confusion and mistrust. That’s where explainable AI plays an important role.

AI is now used to make important decisions, such as making predictions, diagnosing diseases, and even hiring employees. These tasks were once handled only by humans, but machines now handle many of them. Without understanding how these decisions are made, users feel left out. Explainable AI helps solve this problem by making AI decisions easier to follow.

When AI systems are clear and transparent, people trust them more. Businesses can rely on AI not just for speed and accuracy, but also for fairness. Clients want to know why a decision was made, not just what the result was. That’s why building AI systems with explainability in mind is important for long-term success.

What is explainable AI?

Explainable AI means designing AI systems so that people can easily understand how they reach their decisions. Unlike black-box models, which give results without showing how they got there, explainable AI provides the reasons behind each decision. This helps users know what the AI is doing and why.

Transparency in AI is important for many reasons. It helps catch and fix biases in the data or the system. When people understand how AI works, they can work with it more effectively and feel more confident using it. Explainable AI also makes errors easier to find and fix, making the system more reliable and ethical for everyone.

Why explainable AI matters

Explainable AI matters because it helps people trust the decisions made by machines. Today, AI is used in sensitive areas such as health diagnoses, loan approvals, and hiring. If people don’t understand how these decisions are made, they may feel unsure or even reject the results. Explainable AI addresses this by making the system clear and transparent so that users can trust what the AI is doing.

Another reason explainable AI is important is that it makes AI more responsible. It allows developers to find and fix errors or biases, and it helps companies comply with legal rules such as the GDPR. Explainable AI also supports better performance: when we understand how a model reaches its outputs, we can improve it. Most importantly, it helps organizations build AI systems based on fairness and accountability.

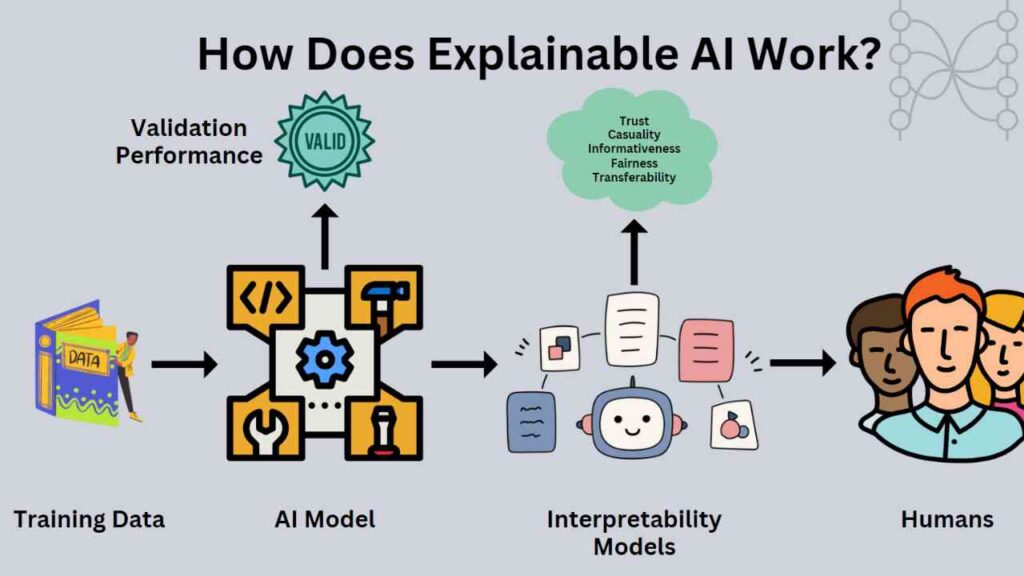

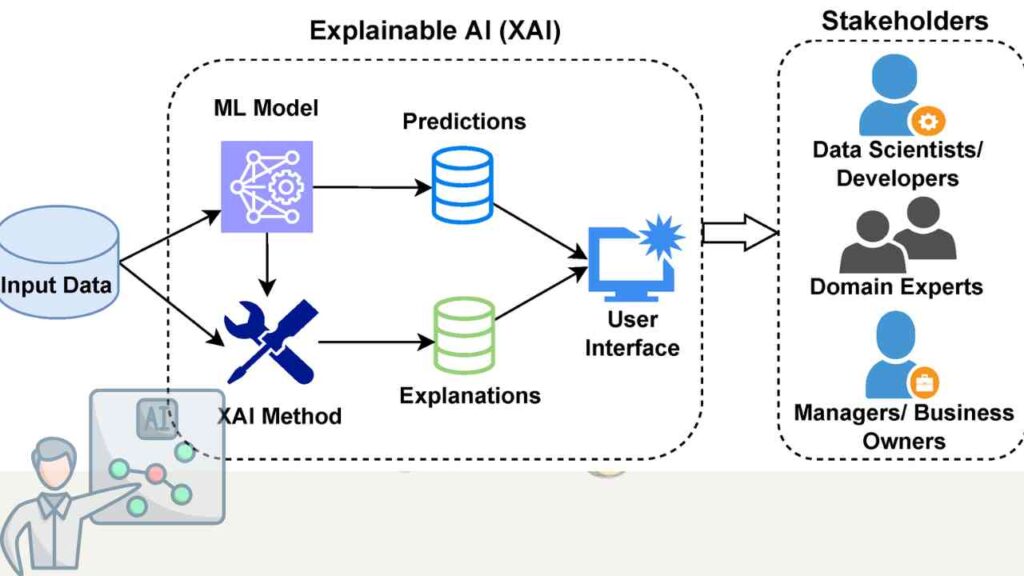

How Explainable AI Works

Explainable AI works by helping us understand how an AI system makes decisions. Unlike black-box models that hide the logic behind their results, explainable AI opens things up like a glass box. It shows the steps and reasons behind predictions so that humans can follow and trust the process. This makes AI more useful, especially in areas where accuracy and clarity matter.

Explainable AI relies on a range of techniques, such as data visualization and specialized algorithms that explain how a result was reached. These tools help people interpret machine learning processes clearly. Explainable AI helps ensure that, even as AI becomes more powerful, we stay in control and can check whether the results are fair and correct.
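To make the "glass box" idea concrete, here is a minimal sketch assuming a simple linear scoring model. Because the score is just a bias plus a weighted sum of features, it splits exactly into one contribution per feature, which is the kind of per-feature explanation that tools such as SHAP aim to produce for more complex models. The feature names, weights, and bias are hypothetical values chosen for illustration.

```python
# Minimal "glass box" sketch: a linear scoring model whose score splits
# exactly into one contribution per feature.
# The feature names, weights, and bias are hypothetical.

WEIGHTS = {"income": 0.4, "debt": -0.7, "years_employed": 0.2}
BIAS = 0.1


def predict(features: dict[str, float]) -> float:
    """Score = bias + sum of weight * value over all features."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def explain(features: dict[str, float]) -> dict[str, float]:
    """Contribution of each feature to the score (weight * value)."""
    return {name: WEIGHTS[name] * value for name, value in features.items()}


applicant = {"income": 2.0, "debt": 1.5, "years_employed": 3.0}
score = predict(applicant)
contributions = explain(applicant)

# List features from most to least influential (largest absolute contribution first).
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>15}: {value:+.2f}")
print(f"{'bias':>15}: {BIAS:+.2f}")
print(f"{'total score':>15}: {score:+.2f}")
```

Here `debt` has the largest (negative) contribution, so it pulls the score down the most, and every number in the explanation can be traced back to a weight in the model.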

AI vs Explainable AI (XAI) – Short Comparison

| Point | AI | XAI |
| --- | --- | --- |
| Meaning | Smart systems that make decisions | AI that explains how decisions are made |
| Transparency | Often works like a black box | Works like a glass box |
| Trust | Hard to trust without an explanation | Builds trust with clear reasoning |
| Error Detection | Hard to identify mistakes or biases in decisions | Easier to spot errors and biases and fix them with human feedback |
| Best Use | General tasks and automation | Sensitive areas like health and law |
| Goal | Focuses on performance and automation | Focuses on understanding, fairness, and responsible AI |

Applications of Explainable AI:

1. Healthcare:

- Explainable AI helps doctors understand why an AI system suggested a specific treatment.

- It gives clear insight into how AI models contribute to drug discovery.

- It builds trust by making AI decisions in patient care traceable.

2. Finance:

- AI credit scores can be confusing, but explainable AI shows why a loan was approved or rejected.

- It helps detect fraud by showing which activity triggered an alert.

- It supports fair decision-making and helps reduce bias.

3. Criminal Justice:

- Explains how an AI system assessed a criminal’s risk level.

- In predictive policing, it helps show how crime hotspots were identified.

- Promotes fairness in legal systems.

4. Autonomous Vehicles:

- Self-driving cars can use explainable AI to justify actions such as turning.

- Helps engineers improve safety by reviewing why a certain decision was made.

- Builds user trust in AI-driven vehicles.

5. Customer Service:

- AI chatbots can use explainable AI to explain their responses to users.

- Improves customer satisfaction and transparency in AI communication.

- Helps customer support teams monitor AI effectiveness.

6. Cybersecurity:

- Shows how cyber threats are detected and blocked by AI systems.

- Makes security actions more understandable to IT teams.

- Increases trust in AI-driven security tools.

7. Recruitment:

- AI hiring tools can explain why a candidate was shortlisted or rejected.

- Explainable AI helps reduce bias in resume screening and interviews.

- Supports fair and transparent hiring processes.

8. Forensics:

- Assists in digital evidence analysis and video processing.

- Helps explain how AI links suspects or events.

- Supports cybercrime investigations with clear logic.

9. Other Areas:

- Used in agriculture, education, industry, and even entertainment.

- Increases clarity in AI systems used in farming, teaching tools, and smart factories.

- Encourages safe and ethical AI adoption across all sectors.
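The finance example above mentions showing which activity triggered a fraud alert. One simple way to get that, sketched here under stated assumptions, is to have the detector return its reasons alongside the decision. The rules, the two-rule threshold, and the `Transaction` fields are all hypothetical; production fraud systems usually rely on learned models, where the reasons would come from an attribution method instead of hand-written rules.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    country: str
    home_country: str
    hour: int  # local hour of day, 0-23


# Hypothetical rules: each pairs a human-readable reason with a check.
RULES = [
    ("amount above 5,000", lambda t: t.amount > 5_000),
    ("purchase outside home country", lambda t: t.country != t.home_country),
    ("purchase between midnight and 5 a.m.", lambda t: 0 <= t.hour < 5),
]
ALERT_THRESHOLD = 2  # hypothetical: alert when at least two rules fire


def check(t: Transaction) -> tuple[bool, list[str]]:
    """Return (alert?, reasons) so every alert explains what triggered it."""
    reasons = [reason for reason, rule in RULES if rule(t)]
    return len(reasons) >= ALERT_THRESHOLD, reasons


suspicious = Transaction(amount=7_200, country="FR", home_country="IN", hour=3)
alert, reasons = check(suspicious)
print("ALERT" if alert else "ok", reasons)
```

Because the reasons travel with the alert, an analyst reviewing it sees exactly which activity caused it rather than a bare "suspicious" flag.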

Benefits of Explainable AI:

1. Better Understanding:

- Explainable AI helps users clearly understand how and why a model made a certain decision.

- It removes confusion and builds trust between humans and AI systems.

2. Promotes Fairness:

- XAI shows how different groups (for example, by age, gender, or background) are treated by the AI.

- This helps reduce bias and supports fair decisions.

3. Easier Debugging:

- If something goes wrong, explainable AI helps find the reason behind wrong or unexpected results.

- It helps improve the model by revealing hidden errors or weak areas.

4. Builds Trust in AI:

- When AI systems are transparent, businesses and users trust them more.

- XAI helps companies deploy AI models faster and with more confidence.

5. Faster and Smarter Results:

- With explainable AI, you can monitor model performance over time.

- It helps improve AI accuracy and saves time in getting better results.

6. Reduces Risk and Cost:

- XAI helps meet legal and business requirements such as compliance and audit checks.

- It helps avoid expensive mistakes and reduces the need for manual model checks.
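The fairness benefit above can be checked with a very simple audit: compare how often the model approves people in each group. The sketch below computes per-group approval rates and the largest gap between them, a metric often called the demographic parity difference. The decisions are made-up data, and a gap is a signal to investigate rather than proof of bias on its own.

```python
from collections import defaultdict

# Hypothetical model decisions: (group, approved?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def approval_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of approved decisions within each group."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


rates = approval_rates(decisions)
# Demographic parity difference: gap between the highest and lowest approval rate.
parity_gap = max(rates.values()) - min(rates.values())
print(rates)
print(f"demographic parity difference: {parity_gap:.2f}")
```

With this sample data, group_a is approved 75% of the time and group_b only 25%, so the model's treatment of the two groups would deserve a closer look.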

XAI Tools and Libraries for Developers:

Explainable AI tools and libraries help developers better understand how complex AI models make decisions. These tools give clear insight into a model’s inner workings, making it easier to find problems that might reduce performance. With explainable AI, engineers can analyze the whole AI system and spot where things go wrong.

As AI models become more advanced and complicated, they also become harder to understand and control. Explainable AI helps break down these complex models, making them less like black boxes and more transparent. This clarity allows developers to fix issues faster and improve the model’s accuracy.

Using explainable AI tools, developers and domain experts can work together to solve problems in AI systems that don’t behave as expected. These tools meet the growing need for transparency in AI engineering, helping build trustworthy and effective AI solutions that perform well and can be used with confidence.

Debugging XAI Models: Common Issues and Solutions

Debugging explainable AI models means finding and fixing problems to make them more accurate and reliable. Common issues include unclear or inconsistent explanations, bias, and performance drops. Explainable AI helps developers see why a model made certain decisions, making it easier to spot errors or biases. For example, if an AI wrongly labels a cat as a dog, explainable AI can show what influenced that mistake. Fixing these issues involves simplifying explanations, checking their consistency, and improving the model’s design. This process is important for building AI systems people can trust.
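The cat-and-dog example can be sketched with occlusion sensitivity, a post-hoc technique that blanks out one region of the input at a time and measures how much the score for the predicted class drops. Large drops mark the regions the model relied on. The 4x4 "image", the toy `dog_score` model, and its weights are hypothetical; the weights are deliberately set so the model leans on a background corner, which is the kind of flaw this check is meant to expose.

```python
# Occlusion sensitivity: blank out one patch at a time and measure how much
# the model's score for its predicted class ("dog") drops.

Image = list[list[float]]

# Hypothetical toy "dog detector": one fixed weight per pixel. The weights are
# chosen so the model relies mostly on the bottom-right corner (think of a
# background feature such as grass) instead of the animal itself.
DOG_WEIGHTS: Image = [
    [0.1, 0.1, 0.0, 0.0],
    [0.1, 0.1, 0.0, 0.0],
    [0.0, 0.0, 0.9, 0.9],
    [0.0, 0.0, 0.9, 0.9],
]


def dog_score(img: Image) -> float:
    """Weighted sum of pixel values: higher means 'more dog-like' to this model."""
    return sum(w * p for w_row, p_row in zip(DOG_WEIGHTS, img) for w, p in zip(w_row, p_row))


def occlusion_map(img: Image, patch: int = 2) -> list[list[float]]:
    """Score drop when each patch x patch block is set to 0 (bigger = more important)."""
    base = dog_score(img)
    size = len(img)
    heat = []
    for r in range(0, size, patch):
        heat_row = []
        for c in range(0, size, patch):
            occluded = [row[:] for row in img]  # copy so the original stays intact
            for i in range(r, r + patch):
                for j in range(c, c + patch):
                    occluded[i][j] = 0.0
            heat_row.append(base - dog_score(occluded))
        heat.append(heat_row)
    return heat


# A "cat photo" (all pixels bright) that this toy model scores as a dog.
cat_photo: Image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(cat_photo)
for heat_row in heat:
    print(" ".join(f"{v:.1f}" for v in heat_row))
```

The bottom-right patch causes by far the largest drop, revealing that this toy model's "dog" verdict rests on a background region rather than on the animal. Real image models apply the same idea with a window sliding over actual pixels.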

Implementing XAI in Different AI Models:

Explainable AI (XAI) helps make AI decisions clear and understandable using methods such as LIME and SHAP. It supports transparency, builds trust, and enables better decision-making across different kinds of AI models.
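SHAP is built on Shapley values from game theory. The sketch below computes exact Shapley values by brute force for a tiny hypothetical model, replacing "absent" features with a baseline value (one common simplifying assumption). It is not the `shap` library's API, and its cost grows exponentially with the number of features, so it only illustrates the idea.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley value of each feature for model(x), by brute force.

    A feature that is "absent" from a coalition is replaced by its baseline value.
    """
    n = len(x)

    def value(coalition: set[int]) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1 (not counting feature i)
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                # Marginal contribution of feature i when added to this coalition.
                phi[i] += weight * (value(coalition | {i}) - value(coalition))
    return phi


# Hypothetical model with an interaction between features 1 and 2.
def toy_model(z: Sequence[float]) -> float:
    return 2 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(v, 3) for v in phi])
```

For this model, the interaction term `z[1] * z[2]` is split evenly between features 1 and 2, and the three values add up to `toy_model(x) - toy_model(baseline)`, the additivity property that makes Shapley-based explanations easy to read.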

Explanation Points:

1. Understanding Your Needs:

- Identify who needs explanations (e.g., users, developers, regulators).

- Consider the AI model’s application and possible risks.

2. Choosing XAI Techniques:

- Model-Agnostic Methods: Work with any model; LIME and SHAP explain how much each feature influenced a prediction.

- Model-Specific Methods: Use the model’s own structure (as in decision trees) to explain its decisions.

- Post-Hoc Techniques: Explain predictions after they are made, often with visuals such as heatmaps.

3. Tools and Implementation:

- Use libraries such as LIME, SHAP, and ELI5 for easy integration.

- Use built-in tools, such as feature importance in decision trees.

4. Testing and Refinement:

- Improve explanations based on user feedback.

- Use XAI to find and fix biased predictions.

5. Communication:

- Match the explanation style to the audience’s level of understanding.

- Clear explanations help build trust in AI systems.
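For the model-specific route in step 2, a decision tree can explain a prediction simply by reporting the path it took from the root to a leaf. The sketch below uses a small hand-built tree with hypothetical features and thresholds rather than any library's tree class; libraries such as scikit-learn expose similar path information for trained trees.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    feature: str
    threshold: float
    left: "Tree"   # branch taken when value <= threshold
    right: "Tree"  # branch taken when value > threshold


Tree = Union[Node, Leaf]


def predict_with_path(tree: Tree, x: dict[str, float]) -> tuple[str, list[str]]:
    """Walk from the root to a leaf, recording each test as a human-readable reason."""
    path = []
    while isinstance(tree, Node):
        value = x[tree.feature]
        if value <= tree.threshold:
            path.append(f"{tree.feature} = {value} <= {tree.threshold}")
            tree = tree.left
        else:
            path.append(f"{tree.feature} = {value} > {tree.threshold}")
            tree = tree.right
    return tree.label, path


# Hypothetical hand-built tree for a loan decision.
TREE = Node(
    "debt_ratio", 0.4,
    left=Node("income", 30_000, left=Leaf("reject"), right=Leaf("approve")),
    right=Leaf("reject"),
)

label, path = predict_with_path(TREE, {"debt_ratio": 0.25, "income": 52_000})
print(label)
for step in path:
    print("  because", step)
```

Because the explanation is just the sequence of tests the model actually applied, it is exact by construction, which is the main appeal of model-specific methods.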

The Future of Explainable AI:

- Human-Centered Explanation: Future explainable AI will focus on giving each user explanations they can easily understand, combining simple language with technical tools.

- XAI-by-Design: AI models will be built with explainability in mind from the start, making it easier to see how decisions are made.

- Counterfactual Reasoning: Explainable AI will show how changes in the input would change a decision, making the reasons behind that decision clearer.

- XAI Toolbox: New methods and technical tools will keep improving how AI explanations are delivered.

- Regulatory Pressure: More laws will require explainability in AI, especially for important uses such as healthcare, finance, and agriculture.

- Interdisciplinary Collaboration: Experts from different fields will work together to solve challenges and improve explainable AI.

- Building Trust: XAI helps people trust AI by making decisions clear, especially when the stakes are high.

- Addressing Bias: It helps spot and fix unfair biases in AI, promoting fairness.

- Ensuring Compliance: XAI helps companies follow laws about transparency in AI.

- Improving Model Understanding: XAI makes it easier for developers to understand and improve AI models.
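Counterfactual reasoning, listed above, answers the question "what would have to change for a different decision?" The sketch below assumes a hypothetical points-based loan model and searches for the smallest change to one input that turns a rejection into an approval. Real counterfactual methods search over many features at once and add constraints such as plausibility; this one-feature search only shows the idea.

```python
from typing import Optional


def score(income_k: int, debt_k: int) -> int:
    """Hypothetical points-based loan model (amounts in thousands)."""
    return 2 * income_k - 5 * debt_k - 40


def is_approved(income_k: int, debt_k: int) -> bool:
    return score(income_k, debt_k) >= 0


def income_counterfactual(income_k: int, debt_k: int, max_income_k: int = 500) -> Optional[int]:
    """Smallest income (debt unchanged) that would turn the decision into an approval."""
    for candidate in range(income_k, max_income_k + 1):
        if is_approved(candidate, debt_k):
            return candidate
    return None


def debt_counterfactual(income_k: int, debt_k: int) -> Optional[int]:
    """Largest debt (income unchanged, debt only reduced) that would be approved."""
    for candidate in range(debt_k, -1, -1):
        if is_approved(income_k, candidate):
            return candidate
    return None


income, debt = 25, 3
print("approved" if is_approved(income, debt) else "rejected")
print(f"would be approved with income {income_counterfactual(income, debt)}k (debt unchanged)")
print(f"would be approved with debt {debt_counterfactual(income, debt)}k (income unchanged)")
```

An explanation phrased this way ("approved if your income were 28k, or your debt 2k") tells the applicant what would change the outcome, not only why it happened.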

Conclusion:

Explainable AI (XAI) is vital for building AI systems that people can trust. It makes AI decisions clear and understandable, helping users and developers improve fairness and accuracy. As AI grows, explainable AI helps keep these systems ethical and aligned with human values. Without XAI, AI would remain confusing and less reliable. Embracing explainability is key to a safer, fairer AI future.